Mastering 2

What is Mastering?

Mastering is a vital part of the recording process, so much so that a substantial amount of ‘mystery’ is associated with it. We’ve all heard stories of high-priced mastering engineers with mystical, proprietary gear based on vacuum tubes salvaged from ancient Russian submarines… or something similar. Mastering is the last step in the record-making chain, where you can alter the overall tone and loudness of a recording, as well as the track order of an album.

To put it very simply, mastering is the polishing, the icing on the cake. This is the process of optimising the sonic characteristics of a track to present your music in the best possible light. Compressors, EQ and limiters are the main tools used at the mastering stage, and all the ‘rules’ we have discussed throughout the course will apply here again: monitors, environment, referencing to other commercial CDs, quality and characteristics of equipment used (valid for plug-ins), etc.

Prior to the digital revolution, mastering had a much more defined set of functions. You brought your finished mixes on tape to a mastering engineer, who would often bounce them to another tape through various signal processors designed to ‘sweeten’ the sound. The tunes would then be assembled in the desired order, and acetate test pressings would be made to evaluate the final product prior to mass-producing albums. Mastering was rightly regarded as an arcane, mystifying art. Few musicians had access to the high-end, expensive tools needed to do mastering, nor did they have the experience of someone who had listened to thousands of recordings and knew how to make them ready for the real world.

Nowadays most commercial releases follow the same process. The artist/producer/engineer/A&R turn up at a commercial mastering studio with the masters (DAT, 1⁄2 inch tape, data CDs, WAV files, etc.), and the mastering engineer first works on each track separately (compression, EQ, limiting, and others) by transferring the masters into a computer system through the processing we just described. Once all the tracks have been recorded and processed, the engineer starts the process of track-listing and implementing gaps, fades or crossfades, and also attends to the relative level of each track. The final product is transferred to a master (e.g. 1630 U-Matic, DAT, pre-master CD, DDP Exabyte, vinyl or sound files) that will be sent for replication.

To understand this and how it relates to the situation today we need to examine the pre-DAW recording environment of the 80s and 90s. During this time the vast majority of recordings that went to mastering came from large, well-equipped studio facilities that were staffed by highly trained, experienced and skilled engineers. It was essentially a given that the 2-track final mixes sent to mastering were not going to need much, if any, corrective work or balancing. Mastering studios, armed with increasingly sophisticated 2-track stereo signal processing tools and handed well-balanced mixes, were therefore able to focus primarily on sonic enhancements. Great 2-track mixes resulted in great mastering – all was well.

In recent years, the rapid worldwide migration of much music recording from large, expensive studio facilities with highly trained and experienced staff to smaller DAW-based “artist” studios has started to have a profound effect on the traditional mastering process. While many of these smaller studios are well-suited for creating and recording music, they are usually not acoustically designed or staffed for precision audio mixing. As a result, mastering studios have to cope with more and more mixes that are musically sound but sonically unbalanced, and they are looking at solutions to deal with this problem.

Multi-Band Compression

In essence, a multi-band compressor consists of a set of filters that splits the audio signal into two or more frequency bands. Three- or four-band compressors are perhaps the best compromise between versatility and ease of setting up. After passing through the filters, each frequency band is fed into its own compressor, after which the signals are recombined.
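The split-and-recombine structure can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: it uses two bands and a very gentle one-pole filter (real crossovers are much steeper), and the function names and test signal are invented for the example. The per-band compressors would sit between the split and the recombine steps.

```python
import math

def split_two_bands(samples, crossover_hz, sample_rate=44100):
    """Split a signal into (low, high) bands with a one-pole low-pass filter.

    The high band is the input minus the low band, so low + high always
    reconstructs the input. Real crossovers use steeper filters.
    """
    # One-pole smoothing coefficient for the chosen crossover frequency.
    alpha = 1.0 - math.exp(-2.0 * math.pi * crossover_hz / sample_rate)
    low, high = [], []
    state = 0.0
    for x in samples:
        state += alpha * (x - state)   # low-pass filter state
        low.append(state)
        high.append(x - state)         # complementary high band
    return low, high

def recombine(bands):
    """Sum the per-band signals back into one signal."""
    return [sum(values) for values in zip(*bands)]

# Invented test signal: a 50 Hz "bass" tone plus a quieter 5 kHz "hi-hat" tone.
sr = 44100
mix = [math.sin(2 * math.pi * 50 * n / sr)
       + 0.2 * math.sin(2 * math.pi * 5000 * n / sr)
       for n in range(sr // 10)]

low, high = split_two_bands(mix, crossover_hz=120, sample_rate=sr)
rebuilt = recombine([low, high])   # with no processing, this matches the input
```

Because the two bands add back up to the original signal, any change you hear after recombining comes from what was done to the individual bands, not from the split itself.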

The main advantage of multi-band compression is that a loud event in one frequency band won’t trigger gain reduction in the other bands, so when that loud kick drum comes along, instead of pulling the whole mix down with it, only the low-frequency sounds (kick and bass instrument) will be compressed, leaving the mid-range and high frequencies untroubled. Similarly, a loud event in the mid-range won’t affect the extreme high or low frequencies for the same reason. Multi-band compressors are great if you need a fair amount of compression over the mix, as they tend to be more transparent. For these reasons multi-band compressors are quite widely used in mastering studios. However, with more parameters and more options at our disposal, a badly set up multi-band compressor can radically change the overall tonal balance of the mix. When it comes to buss processing, I find that a single-band compressor usually works best, and I keep multi-band compression for the mastering stage.

How Multi-band Compressors Work

Multi-band compressors share similar parameters to single-band compressors. Although the interface may differ from plug-in to plug-in, once you have mastered the basics you should be at home using any multi-band compressor out there.

Let’s take a look at Logic’s multi-band compressor and its controls. At first glance you may notice that the plug-in’s central window is divided into four sections. These represent different areas of the overall frequency range. Most multi-band compressors work from 20Hz – 20kHz. The number of bands they use varies, and bands can often be added or deleted. In most cases, the range that each band covers is also user-definable.

Each of these bands represents an individual compressor and has a full set of controls. All the usual suspects are here, such as ratio, threshold, attack and release settings. With your frequency band set, your compressor will only act on the specified frequencies. This is really ideal for treating a particular instrument or problem frequency in your mix.

Using Multi-Band Compressors on Your Master Buss

Multi-band compression isn’t specifically limited to use in mastering, but there’s no doubt this is where it’s utilized most. The ability to home-in on specific frequencies and treat them without colouring others is what sets multi-band processors apart from more basic single-band models.

With a decent multi-band compressor strapped across your DAW’s master output you will be able to tame subsonic frequencies, lower the level of problem percussion sounds, and bring rogue hi-hats and effects into line. All this can take place with varying amounts of compression, and each band can have different attack and release times, perfectly suited to the instrument in hand.

To home-in on each area, solo each band and identify the area you want to treat. Now set the compression so that a few dB of gain reduction is taking place when the instruments in that area are at their loudest. If you repeat this with each frequency band you should introduce a good amount of control that simply wasn’t there before.

For example, if I’m working on a track where I feel the bass end is lacking in weight, I may use a lower threshold and a higher ratio in the low band, with make-up gain, to bring up the average bass level, but still use subtle low-ratio settings for the mid-range and high end. Similarly, by applying heavier compression to frequencies above, say, 6kHz it is possible to increase the high-frequency density to create an effect similar to that of using an enhancer.

It’s worth remembering that when you’re using any kind of compression on your final master, it’s wise to aim for a ‘transparent’ result. This basically means you are aiming to solve any mix issues without it being apparent that there is any compression being applied.

This usually means opting for long attack and release times, low ratios and small amounts of gain reduction. If you find yourself having to use very heavy settings to get the desired effect, you may want to look at the original mix and make some changes. Of course, you may be looking for a very compressed sound as a creative effect, but this will be at the cost of your dynamic range.

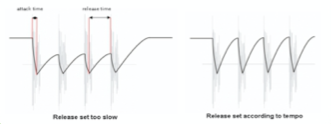

Dealing With The Time Constants

Where the attack and release settings are independently adjustable, try to judge each band on its merits. For example, you might use a moderately fast attack time on the bass end because very low frequencies have no fast transients to compromise. This brings the level under control reasonably quickly. You should then set the release time as short as you can get it without any audible gain-pumping being evident. As a rule, the busier the music, the faster the release time you need to ensure the compressor’s gain resets itself between notes. However, low frequencies tend to hang on longer than high frequencies, so you may need a longer release time at the bass end than in the mid-range. As a starting point, setting the low-band release time to twice your mid-band setting might be reasonable, though if you prefer to keep it simple, you’ll probably get reasonable results by starting off with the attack and release times for all three bands set the same.

The mid-band covers the majority of what we perceive in a musical mix, so this can be set up much as you’d set a full-range compressor. In other words, use a slower attack time if you need to enhance transients, and use a fairly short release time, but not so short that you hear pumping.

What you do in the high-band depends on the result you’re after. If the plan is to increase the density of the high end, use a higher ratio setting to push up the energy, and possibly a faster attack if you need to prevent the transients from becoming too pronounced. High frequencies tend to decay faster than low frequencies, so you may be able to use a faster release time than you did in the mid-range, but listen carefully for any pumping effects or other unnatural gain changes, and increase the release time as necessary to get rid of them. If you’re using a compressor that allows you to solo each of the frequency bands, setting the release time may be easier, but as a ‘ball park’ figure, use half the mid-range release time as a starting point.
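The ball-park release times for a three-band compressor can be written down as a tiny helper. This is a sketch only: the function name and the 200ms example value are assumptions for illustration, and the results are starting points to be adjusted by ear.

```python
def starting_release_times(mid_release_ms):
    """Ball-park release times per band, derived from the mid-band setting."""
    return {
        "low": mid_release_ms * 2.0,   # low frequencies hang on longer
        "mid": mid_release_ms,
        "high": mid_release_ms * 0.5,  # high frequencies decay faster
    }

# 200 ms is an arbitrary example value, not a recommendation.
releases = starting_release_times(200.0)
```

With a 200ms mid-band release, this suggests trying about 400ms on the low band and 100ms on the high band before listening for pumping.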

Multi-band compressors tend to be used early in the mastering chain (I tend to insert mine first), for example to bring out lost elements in the mix. You can then follow with EQ and limiting.

Using Multi-Band Compressors in the Mix

Multi-band compressors can be just as useful in the mixing stage as they are in mastering. Obviously there is not a lot of point treating simple, monophonic sounds with a five-band processor, but groups of instruments can really benefit from this sort of compression.

With a multi-band compressor applied to your drum buss you can treat separate drums. Low- and mid-frequency drum parts can be attenuated without affecting any other area of your drum mix. This sort of treatment will also give your drum sound a cohesive quality that is often hard to achieve with a number of single-band compressors.

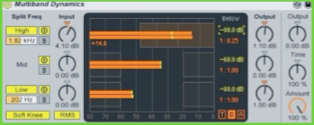

(Logic’s Multipressor Doing its thing on a Drum Bus)

This technique is not just limited to drums and can work with any number of grouped instruments, such as guitars, vocals or even synth lines. As long as the contained sounds have a defined, unique frequency, then the multi-band compressor will be able to clamp down on them.

Multi-band compression is also useful when a number of instruments have been bounced down to one track, where you have lost the ability to tweak individual settings. When using loops from sample libraries you’ll probably find this indispensable.

Multi-Band Compressors as Dynamic Equalizers and More

(Ableton Live’s Multiband Reducing High Frequencies in Vocals)

Most multi-band compressors also allow each band to be boosted in volume. This boosted area can then be compressed so that it is brought back down to its original level whenever the compressor is triggered. This mixture of amplification and attenuation is commonly known as dynamic equalization, and if the right balance is struck it can give you a really pleasing result.

When this technique is employed, areas of the mix that contain small amounts of the frequency band in question will be boosted and higher gain areas will be compressed. This results in a more even sound and can really open up a mix that is perhaps a little dull.
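The boost-then-compress idea can be checked with some simple dB arithmetic. The sketch below assumes an idealised hard-knee compressor acting on one band, and all the levels, the 6dB boost, the threshold and the ratio are invented figures chosen so that the loud passage lands back at its original level.

```python
def dynamic_eq_band_db(level_db, boost_db, threshold_db, ratio):
    """Level of one band after a fixed boost followed by compression."""
    boosted = level_db + boost_db
    if boosted <= threshold_db:
        return boosted                  # quiet passages keep the full boost
    # Loud passages are compressed back towards their original level.
    return threshold_db + (boosted - threshold_db) / ratio

# Invented example: 6 dB boost, threshold at -12 dB, ratio 4:1.
quiet = dynamic_eq_band_db(-40.0, boost_db=6.0, threshold_db=-12.0, ratio=4.0)
loud = dynamic_eq_band_db(-10.0, boost_db=6.0, threshold_db=-12.0, ratio=4.0)
```

Here the quiet passage comes out 6dB higher (at -34dB), while the loud passage ends up back at about -10dB, which is the evening-out effect described above.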

Multi-band compressors can be used for de-essing. Very high sibilant frequencies can be homed-in on and compressed when present. This is essentially what happens in the majority of de-esser plug-ins, but this way you actually have more control over the end result.

These examples show that multi-band compressors can be used in a number of situations besides mastering. It’s well worth getting to grips with this technology.

Choosing Crossover Points

Multi-band compressors often allow the user to adjust the crossover points, so if we take a three-band compressor as an example, where is the best place to set them? The answer is that it depends on the type of material being processed and on what adjustments are needed. OK, I know that isn’t very helpful, so let’s narrow it down a bit and assume we’re processing a full mix of a typical pop song. Setting a crossover point in the middle of the vocal range for example, can mess up the vocal sound, especially if you use radically different compression settings on each side of the crossover frequency.

Generally it is best to set the low crossover point below the vocal frequency range and set the high crossover point no lower than 2.5kHz. A sensible low-frequency setting might be 120Hz, as this is below the vocal range, but above most of the deep bass and kick drum elements going on in the rhythm section. These are only suggested starting points and, as a rule, I’d suggest moving the high crossover point higher than this if you plan to do more than very subtle high-end processing, as it’s easy to over-emphasise the 2-4kHz area of the spectrum, which is where things can start to sound harsh. By contrast, if you restrict heavy processing to above 6kHz, you can significantly enhance the sense of detail and air, without affecting the crucial mid-range and disturbing the natural reproduction of vocals and many acoustic instruments.
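As a rough illustration, the suggested starting points can be expressed as band ranges, with a couple of sanity checks based on the guidelines above. The function names are hypothetical and the checks are only as good as the rules of thumb they encode.

```python
def three_band_ranges(low_xover_hz=120.0, high_xover_hz=2500.0,
                      floor_hz=20.0, ceiling_hz=20000.0):
    """Return (name, low_edge, high_edge) for a three-band split."""
    if not floor_hz < low_xover_hz < high_xover_hz < ceiling_hz:
        raise ValueError("crossover points must be ordered inside the audio range")
    return [
        ("low", floor_hz, low_xover_hz),
        ("mid", low_xover_hz, high_xover_hz),
        ("high", high_xover_hz, ceiling_hz),
    ]

def crossover_warnings(high_xover_hz, heavy_high_band_processing):
    """Flag settings that go against the starting points suggested in the text."""
    notes = []
    if high_xover_hz < 2500.0:
        notes.append("high crossover is below the suggested 2.5kHz minimum")
    if heavy_high_band_processing and high_xover_hz < 6000.0:
        notes.append("heavy high-band processing below 6kHz may sound harsh")
    return notes

bands = three_band_ranges()
notes = crossover_warnings(2500.0, heavy_high_band_processing=True)
```

With the default values the mid band runs from 120Hz to 2.5kHz, and asking for heavy high-band processing with the crossover still at 2.5kHz produces a single warning.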

Preparing Your Tracks For Professional Mastering

Apart from getting the music sounding right, it is just as important to supply the mastering engineer with the right information so that they can proceed with the job satisfactorily. Certainly any audio files sent to the mastering house prior to the session require detailed labelling so that they can be properly identified, but anyone attending a session also needs to have all the necessary information to hand. If ISRC codes are being used then, in the UK, these need to be obtained from Phonographic Performance Ltd (PPL) in advance so that they can be allocated to each track at the end of the session.

It is not recommended to start your mix with your ‘polishing’ buss processes in place, as you’re likely not to work as hard at getting the basic mix right in the first place. It’s important to do the very best you can with your mix before you switch in any buss ‘polishing’, otherwise the final result might suffer.

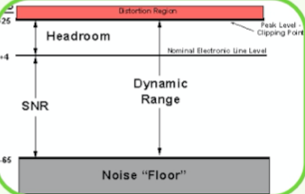

If you’re unsure about processing the whole mix, leave it untouched. Remember it’s easier for a mastering engineer to work from an unprocessed mix than an over-processed one. Don’t add any fades while mixing: fades and crossfades should be done while mastering, when you have a better sense of the ideal fade time. As you mix, you should also watch closely for distortion. A few overloads may not be audible as you listen to the mix, but may be accentuated if you add EQ or limiting while mastering. It’s better to concede a few decibels of headroom rather than risk distortion. As a general rule, 3 to 4dB of headroom is a good average to leave for the mastering process.
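If you want to put a number on the headroom of a bounced mix, the calculation is straightforward. The sketch below assumes floating-point samples where full scale is ±1.0, and the sample values are made up. In practice your DAW's peak meter gives you the same information.

```python
import math

def peak_dbfs(samples):
    """Peak level in dBFS for float samples where full scale is +/-1.0."""
    peak = max(abs(s) for s in samples)
    return float("-inf") if peak == 0 else 20.0 * math.log10(peak)

def headroom_db(samples):
    """Distance between the loudest sample and digital full scale (0 dBFS)."""
    return -peak_dbfs(samples)

mix = [0.0, 0.35, -0.7, 0.5]   # made-up sample values
headroom = headroom_db(mix)    # roughly 3.1 dB for a peak of 0.7
```

A peak of about 0.7 of full scale corresponds to roughly 3dB of headroom, which is the lower end of the range suggested above.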

It’s not necessarily a good idea to add normalisation, as that means another stage of DSP (which may degrade the sound, however slightly) — and you may need to change the overall level anyway when assembling all the mixes into a finished album.

As for trimming the starts and ends of tracks, it’s better to leave a bit of space at the beginning and end of each mix, and to do the final trimmings at the mastering stage.

Master Mix Formats

Back in the early days of home studios, there was little choice when it came to recording your stereo master – the serious guys used a Revox quarter-inch tape machine running at 7.5 or 15ips (inches per second) while everyone else got by with domestic open-reel tape recorders or even cassette decks. Even today, those old Revox machines can give fantastic results, but now they compete with DAT, Minidisc, CD-R, CD-RW and hard drives as a medium for your mixes.

If you plan to take your mixes to a Mastering studio, BE AWARE of the formats they accept, as you don’t want to turn up for the session only to find out that they can’t play your mixes on their system. Most professional mastering studios will accept a wide range of formats to master from, as well as for producing mastered versions that will be sent to the replication factory. Smaller CD mastering studios will probably mainly deal with DATs and CDs (maybe ADAT/Tascam). Which formats you choose will depend on your need and budget. However with a DAW in home studios most mixes are recorded ‘in the box’.

As I have mentioned several times before, most DAWs offer the possibility of working and recording at 24 bits. If you choose to work in this format (which you should really) you should stay in this format until the very last stage, which means that you should record your final mixes at 24 bits as well. The same applies if you work at sample rates higher than 44.1kHz, such as 48kHz, 96kHz and 192kHz.

Note that in this situation you should bring a data CD containing your final mix (in the original format, as mentioned previously). Do not bring an Audio CD, as that means that you will have already converted the mix to 16 bits. Also make sure to check with the mastering studio which audio file formats they accept (WAV, AIFF, SDII, interleaved or split stereo, 24-bit or even 32-bit) and which sample rates. All of these may differ from studio to studio.

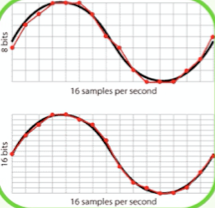

16 bits vs. 24 bits

Recording your final mix in a 16-bit format is fine if it is your final mix, but most of the time you’ll need to do some post-mix mastering, even if it’s only adjusting levels or adding a bit of EQ and compression. Every time you process digital audio, a small amount of resolution is lost due to scaling or rounding-up/down errors, so in an ideal world it’s better to record at more than 16 bits, then dither down to 16 bits at the very end of the mastering process, just before preparing your CD master.
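The effect of rounding errors at different bit depths can be illustrated with a small experiment. This is a simplified sketch: it ignores dither, treats samples as plain numbers between -1.0 and 1.0, and uses an invented low-level sample value and an arbitrary gain change. The theoretical range figure follows from each bit doubling the number of available steps, which is roughly 6dB per bit.

```python
import math

def dynamic_range_db(bits):
    """Theoretical ratio between full scale and one quantisation step."""
    return 20.0 * math.log10(2 ** bits)

def quantise(value, bits):
    """Round a float in the range -1.0..1.0 to the nearest step at this bit depth."""
    steps = 2 ** (bits - 1)
    return round(value * steps) / steps

def gain_round_trip_error(value, bits, gain=0.1):
    """Turn a sample down, store it at the given bit depth, then turn it back up."""
    stored = quantise(value * gain, bits)
    return abs(stored / gain - value)

quiet = 0.001234  # an invented low-level sample value
err16 = gain_round_trip_error(quiet, 16)
err24 = gain_round_trip_error(quiet, 24)
```

The error left behind by the 16-bit round trip is many times larger than the 24-bit one, which is why it pays to stay at the higher resolution until the very last stage.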

How much this loss of resolution matters depends to some extent on the dynamic range of your music. When digital data is subjected to a loss of resolution, the effects are felt most at very low levels where fewer bits are being used to represent the signal. It follows then that classical or acoustic music with a very wide dynamic range is most at risk, while pop music with its limited dynamic range is least likely to be affected in any audible way. Many people believe that one of the advantages of working at 24 bits is that the top end is clearer, but actually it’s probably the bottom end that benefits from the extra bits as it becomes punchier and clearer.

BUSS Compression

vocal lines will still have gaps between phrases, and instruments may come and go according to the arrangement of the song. The outcome is that the overall level of a typical pop mix still fluctuates according to what is and what is not playing at any given time.

Because the dynamic characteristics of a complex mix can vary considerably over the period of a track, a compressor that automatically sets suitable attack and release times is often easiest to use in this application. If your compressor doesn’t have an auto mode, try an attack time of around 20ms and a release time of around 300ms – but experiment with these values because every make of compressor responds differently. Use a low threshold in conjunction with a low ratio to trim a few dB off the original dynamic range, and you should find that the impression of energy and mix integration increases. What’s really happening is that the pauses between vocal and instrumental lines, as well as the gaps between drum beats, are compressed just a little less, which means that the level of the backing track is constantly adjusting itself to maintain a more even overall level. If this were overdone, there would be audible gain pumping, but kept down to two or three decibels, the subjective result can be very musical and can often help prominent parts, such as vocal lines, sit better within a mix.
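To get a feel for what a 20ms attack and a 300ms release mean in practice, the sketch below smooths a gain-reduction signal with a simple one-pole filter. This is one common way of modelling attack and release, not a description of any particular compressor, and the 3dB test pattern is invented.

```python
import math

def smoothing_coeff(time_ms, sample_rate):
    """One-pole coefficient that covers about 63% of a step in time_ms."""
    return math.exp(-1.0 / (time_ms * 0.001 * sample_rate))

def smooth_gain_reduction(target_db, attack_ms=20.0, release_ms=300.0,
                          sample_rate=44100):
    """Smooth a per-sample gain-reduction target (positive dB values)."""
    attack = smoothing_coeff(attack_ms, sample_rate)
    release = smoothing_coeff(release_ms, sample_rate)
    current = 0.0
    smoothed = []
    for target in target_db:
        # Rising reduction uses the attack time, falling reduction the release time.
        coeff = attack if target > current else release
        current = coeff * current + (1.0 - coeff) * target
        smoothed.append(current)
    return smoothed

sr = 44100
# Invented target: 3 dB of reduction for 100 ms, then none for 100 ms.
target = [3.0] * (sr // 10) + [0.0] * (sr // 10)
curve = smooth_gain_reduction(target, sample_rate=sr)
```

With these settings the gain reduction has almost reached the full 3dB after 100ms, but 100ms after the signal drops away it has only recovered to a little over 2dB, so the gain changes slowly enough to avoid obvious pumping.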

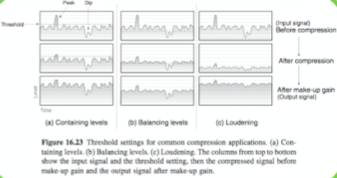

The first use of compression is for controlling signal peaks, so if you want to reduce peak levels without affecting the dynamic range of the rest of the recording, the usual approach is to set a threshold that’s just above the average music level. This way only the peaks are subjected to gain reduction, and the more compression you wish to apply to those peaks, the higher the ratio you’ll need to set. As a rule, ratios of between 2.5:1 and 8:1 are used for this kind of work.

A practical way to set the ratio control is to watch the gain-reduction meters as you vary the ratio and aim for a maximum gain reduction of between 8dB and 10dB. However, it’s still vital that you listen carefully to the processed signal to see if it sounds the way you want it to. Meters can only tell you so much, and if the peaks start to sound squashed, you’ll probably need to either reduce the ratio or increase the compressor attack time.

As a rule, a hard-knee compressor will give the most positive results in situations where the signal peaks are in need of assertive control, and a compressor with a peak-sensing side-chain mode will track peaks more accurately.

The second basic way in which you can use a compressor is for compressing the dynamic range of an entire signal, not just the peaks. In this case, it’s usual to set a very low ratio of between 1.1:1 and 1.4:1 and to set the threshold at around 30dB below the peak level. Soft-knee compressors work well in this role and gentle overall compression is commonly used in mastering or for processing sub-mixes. Conventional RMS rather than peak-sensing would be the norm for this type of job, though don’t let that put you off experimenting, as different makes of compressor can behave very differently.
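The two approaches can be compared with the basic threshold and ratio arithmetic. The sketch below assumes an idealised hard-knee compressor for simplicity (a soft knee would round off the corner at the threshold), and the example levels are invented.

```python
def compressed_level_db(level_db, threshold_db, ratio):
    """Output level of an idealised hard-knee compressor for a given input level."""
    if level_db <= threshold_db:
        return level_db
    return threshold_db + (level_db - threshold_db) / ratio

def gain_reduction_db(level_db, threshold_db, ratio):
    """How many dB the compressor takes off at this input level."""
    return level_db - compressed_level_db(level_db, threshold_db, ratio)

# Peak control: threshold just above an (invented) -12 dB average level,
# with a ratio of 4:1 acting on a -2 dB peak.
peak_gr = gain_reduction_db(level_db=-2.0, threshold_db=-10.0, ratio=4.0)
average_gr = gain_reduction_db(level_db=-12.0, threshold_db=-10.0, ratio=4.0)

# Overall compression: threshold 30 dB below a 0 dB peak, ratio 1.2:1.
overall_gr = gain_reduction_db(level_db=0.0, threshold_db=-30.0, ratio=1.2)
```

In the first case only the peak is touched (6dB of reduction, none at the average level). In the second, the low ratio still adds up to about 5dB at the loudest point because the signal is so far above the threshold, but the reduction is spread gently across the whole dynamic range.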

Most of the compressors we use in music production operate on what is known as the full-band principle, where the entire audio signal is processed via a single gain-control element. A common problem arising from this method of compression is that a loud kick drum (which produces mainly low frequencies), will trigger the compressor and consequently pull down the gain of everything else that happens to be passing through the compressor at the time, even though those other sounds might not need compressing. If the compressor is acting on a solo kick drum track, then clearly this isn’t a problem, but if the whole drum kit is being compressed, the high-frequency sounds, such as hi-hats and cymbals (which carry relatively little acoustic energy), will be compressed along with the kick drum, the outcome of which is often a subjective dulling of the sound during times of heavy compression.

The situation worsens when you’re compressing a whole mix, because the low-frequency sounds in the mix determine the compression applied to everything else, so what tends to happen is that the kick drum and bass line dictate how the mix will be compressed. One way to disguise this aspect of full-band compression is to set a slightly longer attack time so that transients can pass through cleanly before the gain reduction takes place, but clearly this does little to control peak levels, which is important when you’re working with digital systems that can’t tolerate overloads, however brief. In some situations these side effects can be musically desirable, and in some forms of rock and pop music a hint of gain pumping (audible compression due to rapid gain changes) can add energy and excitement, providing it isn’t overdone.

Separation Mastering

This technique involves submitting the final mix from the recording studio as a small collection of ‘separations’ (typically 4 audio files, e.g. vocals, drums, bass, remaining instruments).

The mastering engineer can now adjust major elements without compromise and then sum them together to create the final master. The Separation Mastering process also eliminates “alternate mixes” (since the Separations are continuously variable), eliminates costly “recall” or remix sessions (since mixes don’t have to be rejected by the mastering house, virtually any reasonably close mix can be salvaged and enhanced), and the final masters have been shown to be consistently more clear and detailed due to, among other causes, the re-rendering with a higher end, low-jitter mastering studio digital clock.

The biggest possible drawback to this method is giving the mastering engineer too much control over the respective levels (for example, vocal volume in relation to guitar volume.) For this reason, the mastering engineer, to ensure that the original mix intent and musical proportions are adhered to, usually uses a copy of the artist’s original stereo mix as an A-B reference.

Here are some simple steps to create ‘Separations’

Start by making a folder on your hard drive and label it with the name of the song you’re working on [Your Song].

1) Simply record your normal completed 24-bit stereo mix. Name the file [Your Song] Mix.aif (or wav, SDII, etc.) and put it into the [Your Song] folder.

Then, without changing any levels:

2) Mute (or disable) all tracks except the drums and make a separate 24 bit stereo recording of just the drums (with all related effects). Name the file [Your Song] Drums.aif (or wav, SDII, etc.) and put it into the same folder.

3) Mute (or disable) all tracks but the bass – record the bass separately (in 24 bit stereo with all related effects). Name the file [Your Song] Bass.aif (or wav, SDII, etc.) and put it into the same folder.

4) Mute (or disable) all tracks but the remaining instruments – record the instruments in 24 bit stereo separately with all related effects. Name the file [Your Song] Instr.aif (or wav, SDII, etc.) and put it into the same folder.

5) Mute all tracks but the vocals – record the vocals separately (24 bit stereo with effects). Name the file [Your Song] Vocals.aif (or wav, SDII, etc.) and put it into the same folder.

Remember ‘separation’ mastering is not mixing – all decisions like flanging, compression on lead vocal, eq on kick drum, etc… are done at the mixing stage.
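If you prepare separations regularly, a small script can help you check that nothing has been forgotten before the files are sent off. The sketch below simply builds the file names used in the steps above and lists any that are missing. The function names are hypothetical, and the extension should match whatever format the mastering studio has asked for.

```python
from pathlib import Path

# Part names follow the numbered steps above; the stereo mix comes first.
PARTS = ["Mix", "Drums", "Bass", "Instr", "Vocals"]

def separation_paths(song_name, root=".", extension="aif"):
    """Build the expected file paths inside the song's folder."""
    folder = Path(root) / song_name
    return [folder / f"{song_name} {part}.{extension}" for part in PARTS]

def missing_files(song_name, root=".", extension="aif"):
    """List any expected files that have not been bounced yet."""
    return [p for p in separation_paths(song_name, root, extension)
            if not p.exists()]

names = [p.name for p in separation_paths("Your Song", extension="wav")]
```

For a song called "Your Song" delivered as WAV, this expects "Your Song Mix.wav", "Your Song Drums.wav" and so on, all inside a folder named after the song.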

‘Separations’ files are often wrongly referred to as ‘stems’. Here’s a quick reference for you:

Stems are for a variety of uses – stems are also called groups (subgroups or sub-masters) and are used in different applications. You might use 5 to 20 stems for mixing 24 to 80 tracks, like we discussed with backing vocals (but you’ll probably only want to make 4 to 8 Separations for mastering).

· Stems can control a larger number of faders with a single stereo fader

· Stems are used for video, film, live sound and large-format mixes

· Stems are used to sum to stereo “outside the box” via console or analog summing box

· Stems may or may not include reverb or effects returns (some engineers do not group their effects returns into their stems)

More DAW-based mastering studios have started to use this technique, and it might be a good option if you plan to have your tracks mastered for commercial release, but your mixing environment and/or lack of experience prevents you from achieving the mix you want.

However, if you’re really happy with your final mixes, then you’ll probably end up delivering a two-track stereo mix. The advantage of this is that you have more control over the overall balance, and if a mix sounds great, well, there’s the old saying: “If it ain’t broken…”

With more and more mastering tools available for DAWs and the race to ‘Loudness’, it can be tempting when mixing at home to just ‘slam’ the mix with a maximizer or multi-band compressor, but if you plan to get the track mastered professionally, I would strongly recommend that you don’t. If you have squashed the hell out of it yourself, there’s not much the engineer will be able to do. There was a trend in the early 2000s, with the appearance of devices such as the Finalizer by TC Electronic, where mix engineers would insert a limiter or multi-band compressor across the whole mix, effectively squashing it. I’ve heard quite a few mastering engineers complaining about that, as they had nothing left to work with. However, it is quite a common practice to ‘process’ the whole mix, using EQ and/or compression, as we discussed in our first chapter.

Home Mastering

Today, the tools for quality mastering are finally within the financial and technical reach of anyone who’s serious about recording. However, 95 percent of mastering is not in the tools — it’s in the ears. Unless you have the ears of a mastering engineer, you can’t expect any plug-in to provide them for you. Besides, much of the point of using a mastering engineer is to bring in an objective set of ears to make any needed changes prior to release – which is why most commercial releases tend to be mastered by professionals, in professional facilities.

So where does it leave us? Does this mean only experts should attempt to do mastering? No. Firstly, not all mastering situations require a professional’s touch. If you intend to play demos to get a deal, there’s a strong argument for mastering your tracks to give the listener a better experience. Nowadays, if you’re looking to release a dance track on a small independent label, the label will expect you to deliver a finished master (a stereo mix) which they will send to a mastering studio. So in order to help get a deal, by way of illustration to the companies, it is common practice to do some home mastering. Furthermore, the only way to get good at anything is practice, and with ever-improving technology, we now have very decent tools in our hands to do a good job.

Because most home projects are realized within a sequencer package such as Cubase SX or Logic, there is a trend that has seen the mixing and mastering process occur simultaneously. There are advantages and disadvantages to this method. I personally prefer to get my mix as good as possible and then treat the mastering as a separate process (old school). However, mastering while you mix means you know exactly what the final version will sound like.

But remember that a huge part of conventional mastering is about involving someone who can be more objective about what needs to be done with your music. Even if this person turns out to be you, at least you can approach the mastering with fresh ears by undertaking this process at a later stage.

Home mastering is generally performed within a DAW, with plug-ins taking care of the processing. It is often done with specialised digital audio editing programs such as Sonic Foundry’s Sound Forge, Steinberg’s Wavelab, Bias’ Peak, Adobe’s Audition or Apple’s Waveburner, or with Pro Tools, SADiE and Sonic Solutions for high-end users. You can also use conventional multitrack recording programs such as Cubase SX or Logic.

So what are the main tools used for mastering? Dynamics and EQ cover 99 percent of what’s needed in most cases.

Some Basic Steps

The more traditional approach is to take each tune, master it, then as a separate operation, assemble all the tunes into a cohesive whole. A newer approach is to assemble all the tunes first and then apply any processing on a more global level. Basically, this combines both mastering and assembly into one operation. We’ll look at the ‘individual song’ approach.

Open up a new file and import the mix onto a stereo track. If you need to process the right and left channels independently (for example, if there’s an instrument in the left channel that has excessive treble, and you want to EQ just that channel a bit without processing the right channel), then separate the stereo file into two mono files (typically using a digital audio editor) and import each one into its own track.

Before heading to your EQ and limiters, you might want to consider the possible need for noise reduction. Sometimes there may be a slight hiss, hum, or other constant noise at a very low level. If you can obtain a clean sample of this sound, it can be loaded into a noise-reduction program that mathematically subtracts the noise from the track. Even if this noise is way down in level, removing it can improve the sound in a subtle way by opening up the sound stage and improving stereo separation.

Reduce Peaks Using Automation

If some peaks are significantly louder than the rest of the material, they limit how high the average level can go, because the peaks use up much of the headroom. One solution is to add limiting, but another option that can affect the sound less is to use an automation envelope to reduce the levels of just those peaks. If the automation works on just a single cycle of the waveform, you probably won’t hear any difference compared to not reducing that peak, but once the major peaks are reduced, you’ll be able to raise the overall level. Furthermore, if you do add any compression, it won’t have to work as hard.
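As a rough illustration of the idea, here is a minimal Python sketch. It is only a sketch under stated assumptions: the function names, the 440Hz test tone, the -11dBFS ceiling and the roughly one-cycle automation window are all invented for the example, and a real DAW envelope would be drawn by hand rather than computed.

```python
import math

def db_to_gain(db):
    """Convert a level change in decibels to a linear gain factor."""
    return 10 ** (db / 20.0)

def tame_peaks(samples, ceiling_db, half_window=50):
    """Dip the gain briefly around every sample that exceeds the ceiling.

    The gain envelope is 1.0 everywhere except for a short triangular dip
    centred on each stray peak, imitating a hand-drawn automation move.
    """
    ceiling = db_to_gain(ceiling_db)
    envelope = [1.0] * len(samples)
    for p, s in enumerate(samples):
        if abs(s) <= ceiling:
            continue
        dip = 1.0 - ceiling / abs(s)  # gain to remove at the peak itself
        lo = max(0, p - half_window)
        hi = min(len(samples), p + half_window + 1)
        for i in range(lo, hi):
            ramp = 1.0 - abs(i - p) / (half_window + 1)  # 1 at the peak, fading out
            envelope[i] = min(envelope[i], 1.0 - dip * ramp)
    return [s * g for s, g in zip(samples, envelope)]

def normalise(samples, target_db):
    """Apply one overall gain so the loudest sample lands on target_db (dBFS)."""
    peak = max(abs(s) for s in samples)
    gain = db_to_gain(target_db) / peak
    return [s * gain for s in samples]

def rms_db(samples):
    """Average (RMS) level in dBFS."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20.0 * math.log10(rms)

# Hypothetical material: a 440Hz tone at -12dBFS with one stray peak near full scale.
rate = 44100
mix = [db_to_gain(-12.0) * math.sin(2 * math.pi * 440 * n / rate) for n in range(rate)]
mix[1000] = 0.98

level_before = rms_db(normalise(mix, -0.1))
level_after = rms_db(normalise(tame_peaks(mix, -11.0), -0.1))
print(f"average level without peak reduction: {level_before:.1f} dBFS")
print(f"average level with peak reduction:    {level_after:.1f} dBFS")
```

Both versions peak at the same level, but the one with the stray peak tamed ends up with a much higher average level, which is the whole point of the exercise.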

EQ and Mastering

EQ in mastering is often used to tuck away annoying frequencies and to enhance the frequencies that make the recording special. This usually involves boosting the fundamental range for songs that require a fatter sound and doing the opposite for songs that need to lose a bit of weight. Using EQ to enhance certain frequencies also often involves boosting upper harmonics on songs that need to sound a little more exciting and to bring out the ‘air’ characteristics so that there is a more ‘glossy’ and ‘polished’ sound overall.

For mastering, you’ll hopefully be dealing in broad strokes — a mild bass cut, or a little high-end lift. This is why many older equalisers are favored for mastering, because they have a subtle, yet pleasing, effect on the sound. Plug-ins like Steinberg’s TLA1, PSP’s MasterQ, and the UAD1’s Pultec emulation fulfil this role in software. Significant EQ problems, like large mid-range or low-end peaks, should have been fixed in the mixing process. If they weren’t, you’re likely to need to plug in a full-blown parametric EQ, and tweak out the individual problems.

Frequencies Guidelines

- Boosting bottom end for a warmer and fatter sound. 60 – 150Hz around where the bass and kick drum are.

- Boosting midrange for more presence and bite, if needed, although be careful here as the mid range covers the majority of what we perceive in a musical mix. 900Hz – 3kHz

- Boosting hi-mid frequencies will also bring bite and clarity. Also be careful as overdoing it will bring harshness and emphasize sibilance. 4kHz – 8kHz

- Boosting around 10kHz will help a mix a lot if it’s a bit on the dull side.

- Boosting around 12kHz will bring ‘air’, making the mix more ‘open’.

- Notching at around 150Hz – 400Hz will help a muddy mix, removing boxiness.

- Notching the mid / hi-mid will soften a mix which is too harsh. 1kHz – 3kHz.

- Also it is very common to apply a hi-pass filter around 20Hz – 30Hz. This can help a great deal to tighten up the bottom end.

- In the case of mastering for broadcast, the bandwidth of the signal has to be reduced. For example, for TV broadcast apply a high-pass filter at 80 Hz with -18 dB/octave to filter out low frequencies, and apply a low-pass filter at 12 kHz with -9 dB/octave to filter out high frequencies.
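To make the high-pass guideline above more concrete, here is a minimal Python sketch of a 30Hz high-pass filter. The choices here are assumptions, not something the course prescribes: a second-order (12 dB/octave) filter with a Q of about 0.707, using the widely published "Audio EQ Cookbook" biquad formulas, and all function names are made up for the example.

```python
import math

def highpass_coefficients(cutoff_hz, sample_rate, q=0.7071):
    """Second-order high-pass biquad coefficients, normalised so a0 = 1."""
    w0 = 2.0 * math.pi * cutoff_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha
    b = [(1.0 + cos_w0) / 2.0 / a0, -(1.0 + cos_w0) / a0, (1.0 + cos_w0) / 2.0 / a0]
    a = [-2.0 * cos_w0 / a0, (1.0 - alpha) / a0]
    return b, a

def apply_biquad(samples, b, a):
    """Run the filter over the samples (direct form I)."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out

def steady_state_gain(freq_hz, cutoff_hz=30.0, sample_rate=44100):
    """Measure how much a sine at freq_hz is attenuated by the filter."""
    n = sample_rate  # one second of audio
    tone = [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]
    b, a = highpass_coefficients(cutoff_hz, sample_rate)
    filtered = apply_biquad(tone, b, a)

    def rms(values):
        return math.sqrt(sum(v * v for v in values) / len(values))

    # Skip the first half so the filter's start-up transient is ignored.
    return rms(filtered[n // 2:]) / rms(tone[n // 2:])

for freq in (10.0, 30.0, 1000.0):
    print(f"{freq:7.1f} Hz -> gain {20 * math.log10(steady_state_gain(freq)):.1f} dB")
```

Sub-sonic content at 10Hz is cut substantially while 1kHz passes essentially untouched, which is why this kind of filter tightens the bottom end without changing the rest of the mix.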

Limiters

With a limiter, any input signal below the threshold is not changed at all. Any input signal that exceeds the threshold is ‘limited’ to that level.

Limiters are often used to protect transmission chains, such as in broadcasting and CD mastering to prevent peak distortion. In digital systems, as opposed to analogue tapes, this is very important, as there is no warning of distortion – the signal is ok until it reaches 0dBFS, and then it clips in an ugly fashion.

In addition, using a limiter allows us to increase the loudness of the audio while maintaining the same peak level. This process is being used more and more: with CD mastering, everyone wants the loudest CD.
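The trade described here, more loudness for the same peak level, can be sketched in a few lines of Python. This is a deliberately crude illustration: a hard clamp stands in for the limiter (real limiters use look-ahead and smooth gain reduction rather than clipping), and the decaying test tone, the 6dB of input gain and the function names are assumptions made up for the example.

```python
import math

def db_to_gain(db):
    """Convert decibels to a linear gain factor."""
    return 10 ** (db / 20.0)

def peak_db(samples):
    """Highest sample level in dBFS."""
    return 20.0 * math.log10(max(abs(s) for s in samples))

def rms_db(samples):
    """Average (RMS) level in dBFS, a rough stand-in for loudness."""
    return 20.0 * math.log10(math.sqrt(sum(s * s for s in samples) / len(samples)))

def crude_limit(samples, input_gain_db, ceiling_db=-0.1):
    """Boost the signal, then clamp anything beyond the ceiling.

    Assumption: a hard clamp is used only to keep the sketch short.
    """
    gain = db_to_gain(input_gain_db)
    ceiling = db_to_gain(ceiling_db)
    return [max(-ceiling, min(ceiling, s * gain)) for s in samples]

# Hypothetical material: a note with a loud attack and a long, quiet decay.
rate = 44100
note = [db_to_gain(-0.1) * math.exp(-3.0 * n / rate) * math.sin(2 * math.pi * 220 * n / rate)
        for n in range(rate)]

limited = crude_limit(note, input_gain_db=6.0)
print(f"original: peak {peak_db(note):.2f} dBFS, average {rms_db(note):.1f} dBFS")
print(f"limited:  peak {peak_db(limited):.2f} dBFS, average {rms_db(limited):.1f} dBFS")
```

The peak stays at the ceiling while the average level goes up, because the quiet decay has been raised and only the loud attack has been held back.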

Limiters, like compressors, come as two basic types: single-band and multi-band.

As a general rule, you tend to get a more transparent sound with multi-band dynamics when pushed harder, where a single-band might give you the ‘pumping’ effect.

Limiters should be placed as the last plug-in in the chain, as they will prevent peaks going above a set level.

The more limiting you add, the louder the quietest parts of the mix will be; when pushed at the extreme, all parts will be loud. How much limiting you need to apply depends on the genre of music you are mastering. Where ‘classical’ music will require gentle and transparent limiting, modern music productions can tolerate more of a ‘squash’. However, be careful how hard you are pushing the limiter, as although designed to be ‘transparent’, in reality different devices will give you a very different result in relation to the music content and the desired ‘loudness’.

So if you have a few different plug-ins, it might be worth comparing them. Working at the same level, you will find that some might be harsher than others, or start adding a slight distortion at certain levels.

How Loud?

There is a trend today to master music as loud as possible. In fact it has become the industry standard, and people now believe that a quieter CD doesn’t sound as good as a louder CD. Obviously to get a ‘loud’ CD, the easy way is to insert a limiter and push it hard (+9dB), but there is a danger of squashing the dynamic range too much, squashing all the frequencies closer together, hence losing life and frequency range. This is why you shouldn’t rely on a limiter to correct frequency balance problems in your mix, as you will get a very unnatural result. The average ‘level’ in modern music production (pop, hip hop, dance, etc…) is from 6dB to 9dB. I find that some homemade mastered CDs that have been pushed higher sound extremely tiring to the ears.

Master Fader

Although most modern audio software packages use 32-bit floating-point audio engines and have lots of headroom, overloading can still occur unless levels are set properly, especially if the master buss is the sum of different channels. Clipping indicators are helpful, but programs that include a numeric read-out of how much a peak level is above or below 0dBFS are far more useful. This value, called the ‘margin’, is positive if the level is above 0dBFS and negative if below. If possible, enable any kind of peak-hold feature so that you can see the highest level attained at the end of a song without having to keep your eyes glued to the meters. Note that if the margin indicator isn’t reset automatically (when you click the transport stop button, for instance), you’ll have to clear the value manually from time to time.

The faders themselves should also be calibrated. Here’s an example of how to use this feature:

Suppose the fader is currently set to 0dB gain, and you send in a signal that reaches -3dBFS. The margin indicator will also show -3dBFS. If the master fader setting is -1.5dB and you feed in the same -3dBFS signal, then the margin indicator would show -4.5dBFS. This figure is the original value minus the amount of attenuation provided by the master fader.
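The arithmetic in this example is simple enough to check in code. The following Python sketch is illustrative only: `margin_db` and `PeakHoldMeter` are hypothetical names, not features of any particular sequencer.

```python
import math

def margin_db(peak_dbfs, fader_gain_db):
    """Margin shown after the master fader: decibel values simply add."""
    return peak_dbfs + fader_gain_db

class PeakHoldMeter:
    """Remembers the highest level seen until it is reset by hand."""

    def __init__(self):
        self.held_peak = 0.0  # linear value, 1.0 = 0dBFS

    def feed(self, samples):
        """Update the held peak with a new block of samples."""
        block_peak = max((abs(s) for s in samples), default=0.0)
        self.held_peak = max(self.held_peak, block_peak)

    def margin(self):
        """Held peak relative to 0dBFS: negative below, positive above."""
        if self.held_peak == 0.0:
            return float("-inf")
        return 20.0 * math.log10(self.held_peak)

    def reset(self):
        self.held_peak = 0.0

print(margin_db(-3.0, 0.0))   # fader at 0dB
print(margin_db(-3.0, -1.5))  # fader at -1.5dB

meter = PeakHoldMeter()
meter.feed([0.1, -0.5, 0.2])
meter.feed([0.25, -0.3])
print(f"held margin: {meter.margin():.1f} dB")
```

The meter keeps the margin of the loudest block it has seen, which is the behaviour you want from a peak-hold display when checking a whole song in one pass.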

In mastering situations, the limiter output setting will enable you to set the desired maximum ‘level’. Although you can technically set the desired level at 0dBFS, it is recommended to set it at a slightly lower level, typically -0.1dBFS. This is important, because if a tune has peaks that hit 0dBFS for more than a few milliseconds, it may be rejected by a CD pressing plant, on the assumption that those peaks represent distortion.

Dithering

Ok so now you have your final master, still in high definition (24-bit, at whatever sample rate you chose to work at). You now need to convert it to a 16-bit / 44.1kHz file in order to create an audio CD.

We’ve discussed earlier in the course why we should record and mix our project at 24-bit if possible, and at a higher sample rate (48kHz / 96kHz) if available. But what do you do to make sure your pristine 24-bit/96kHz master sounds good at this lower resolution? This is where the practice of dithering comes into play.

Most producers only really think about dithering when bouncing down the final master of a recording. This is the very last stage of production, usually converting a 24 or 32-bit stereo file to a 16-bit file for encoding to CD. However, dithering is actually extremely common in the digital domain. It is just that it is usually handled automatically by the various converters, processors and algorithms in the studio, and remains very much behind the scenes. Dithering is usually applied by any process that transforms the signal in some way.

What is dithering?

To put it bluntly, dithering is the adding of noise to an audio signal, with the aim of reducing unpleasant distortion.

The first thing you need to understand is that whenever we go from a high-resolution signal to a low-resolution signal, there is going to be a quantization error as a result.

In audio, what we’re trying to avoid is the audio signal being “not fluid” because of truncation errors.

In the digital domain, low-level signals are particularly susceptible to finite word-length artefacts. The lower the bit depth, the more prominent these artefacts become. In a 16-bit recording, we have a 96 dB dynamic range with which to encode music. This is great for loud signals. However, when the amplitude of the audio decreases, we have fewer bits available to encode the waveform. During a fade out, for example, the available bits reduce gradually until we only have one bit to encode with.
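The figures behind this can be worked out with a couple of lines of Python. Each bit doubles the number of available levels, which is worth about 6dB, so the sketch below simply applies that rule; the function names are made up for the example, and `bits_in_use` is only an approximation of how many bits a peak at a given level still exercises.

```python
import math

DB_PER_BIT = 20.0 * math.log10(2.0)  # about 6.02dB for every extra bit

def dynamic_range_db(bits):
    """Theoretical dynamic range of a linear PCM word of the given length."""
    return bits * DB_PER_BIT

def bits_in_use(level_dbfs, bits=16):
    """Approximate number of bits still exercised by a peak at level_dbfs."""
    return max(0.0, bits + level_dbfs / DB_PER_BIT)

for word_length in (16, 24):
    print(f"{word_length}-bit: {dynamic_range_db(word_length):.1f} dB")

# A fade out: the lower the level, the fewer bits describe the waveform.
for level in (0.0, -30.0, -60.0, -90.0):
    print(f"{level:6.1f} dBFS -> about {bits_in_use(level):.1f} bits")
```

By the time a fade reaches -90dBFS in a 16-bit file, the waveform is being described by roughly one bit, which is where the stair-stepping becomes a real problem.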

So to minimize this effect, we need dithering. The concept of dither is to add some random noise to the waveform in order to “break up” the statistical determinability of the stair stepped waves – to put it simply to make the truncated waveform more fluid.

We do this by literally adding noise, typically white noise.
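Here is a minimal Python sketch of that idea, reducing a signal to 16-bit with and without added noise. Several things in it are assumptions for the sake of illustration: the noise is triangular (two uniform random values summed) and spans about one 16-bit step either way, the quantiser rounds rather than truncates, and the test tone is deliberately smaller than half a step so the difference is obvious. Real dither plug-ins are considerably more refined.

```python
import math
import random

FULL_SCALE_16 = 32767  # largest positive 16-bit sample value

def quantise_16(samples, dither=False, rng=None):
    """Convert float samples in [-1, 1] to 16-bit integers.

    With dither=True, low-level random noise is added before rounding.
    Assumption: triangular noise made by summing two uniform values.
    """
    rng = rng or random.Random(0)  # fixed seed so runs are repeatable
    out = []
    for s in samples:
        scaled = s * FULL_SCALE_16
        if dither:
            scaled += rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)
        value = int(round(scaled))
        out.append(max(-32768, min(32767, value)))
    return out

# Hypothetical very quiet tone: its peak is only 0.4 of one 16-bit step.
rate = 44100
tone = [(0.4 / FULL_SCALE_16) * math.sin(2 * math.pi * 440 * n / rate) for n in range(rate)]

plain = quantise_16(tone)
dithered = quantise_16(tone, dither=True)

# How much of the original tone survives in each version.
kept_plain = sum(q * t for q, t in zip(plain, tone))
kept_dithered = sum(q * t for q, t in zip(dithered, tone))

print("non-zero samples without dither:", sum(1 for q in plain if q != 0))
print("non-zero samples with dither:   ", sum(1 for q in dithered if q != 0))
print("tone still present with dither: ", kept_dithered > 0)
```

Without dither the quiet tone disappears entirely into digital silence. With dither the output is noisy, but the tone is still in there underneath the noise, which is exactly the trade-off being described.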

Relating to distortion

For any given bit-depth, the quieter the signal, the higher the level of relative distortion. To avoid noticeable distortion in low-level signals, it is preferable to add some smoothing noise to the signal – it’s a trade-off, adding mildly undesirable hiss in order to remove far less desirable harmonic and intermodulation distortion.

There is a huge variety of algorithms out there that calculate this noise in slightly different ways, and this is what makes one dithering process sound better (or worse) than another. However, even the simplest dither is preferable to truncation. The dithering effectively spreads and rounds the errors across a broader range by creating broadband noise around the least significant bit.

It’s always best to produce music at least at 24-bit quality – this provides 144 dB of dynamic range, and ensures that dithering artefacts are effectively inaudible. Any reduction to 16-bit should only be done as the final step in the process – and should always be performed using dithering.

The bottom line is that WHENEVER a signal goes from a higher resolution to a lower resolution, it is necessary to dither in order to avoid the artefacts produced by truncation, as described above. This means that whenever signals go from 48-bit resolution for processing to 24-bit resolution, or 24-bit resolution for mixing to 16-bit, or even analogue (infinite resolution) to 24-bit during A/D conversion, dithering needs to happen. In the case of the analogue conversion process, this dither happens naturally by means of the thermal noise within the converters.

This all gets much more complicated, however, when we start to discuss other dither algorithms used for particular applications. Above, we described the use of white noise to dither, but the concept here is that it would be possible to add noise other than white noise that we humans might find even less offensive than true white noise. We humans are more sensitive to certain frequencies than others, so it would be possible to filter the noise so that it was more concentrated on frequencies that were less offensive. There are several algorithms on the market at this point from many manufacturers for adding unique dither to your audio. POW-r (used in Logic, Peak, etc.), DitherCD, and IDR (used by Waves, for example) are all examples of these types of algorithms. Apogee uses a scheme called “UV22” (available in Cubase SX) which focuses most of this random noise at frequencies above the human hearing range. The “22” comes from the fact that the majority of the noise occurs in their algorithm at 22kHz.

With the use of plug-ins nowadays, you might have several plug-ins using different algorithms to dither, leaving you wondering which one is best for the job. I find that a good test is to listen to a quiet moment in the audio you’re dithering, i.e. reverb tails or a fade out, at a fairly high level on headphones. Some will be grainy, some noisy, and some distorted, indicating improper dithering or poor calculation. The winner of this test should be your choice of dithering processor.